The RAND spokesperson did not otherwise respond when asked about a partnership with NIST on AI safety research.

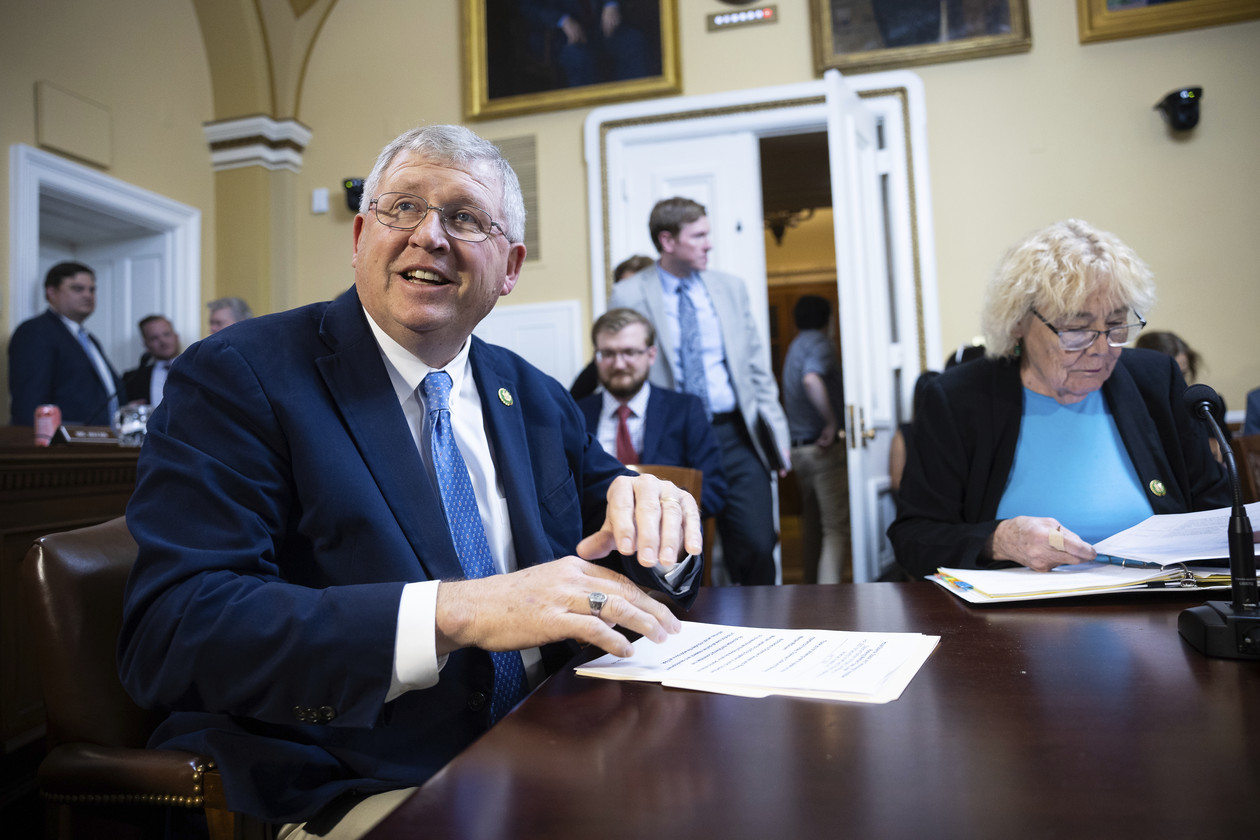

Lucas spokesperson Heather Vaughan said committee staff were told by NIST personnel on Nov. 2 — three days after Biden signed the AI executive order — that the agency intended to make research grants on AI safety to two outside groups without any apparent competition, public posting or notice of funding opportunity. She said lawmakers grew increasingly concerned when those plans were not mentioned at a NIST public listening session held on Nov. 17 to discuss the AI Safety Institute, or during a Dec. 11 briefing of congressional staff.

Vaughan would neither confirm nor deny that RAND is one of the organizations referenced by the committee, or identify the other group that NIST told committee staffers it plans to partner with on AI safety research. A spokesperson for Lofgren declined to comment.

RAND’s nascent partnership with NIST comes in the wake of its work on Biden’s AI executive order, which was written with extensive input from senior RAND personnel. The venerable think tank has come under increasing scrutiny, including internally, for receiving over $15 million in AI and biosecurity grants earlier this year from Open Philanthropy, a prolific funder of effective altruist causes financed by billionaire Facebook co-founder and Asana CEO Dustin Moskovitz.

Many AI and biosecurity researchers say that effective altruists, whose ranks include RAND CEO Jason Matheny and senior information scientist Jeff Alstott, place undue emphasis on potential catastrophic risks posed by AI and biotechnology. The researchers say those risks are largely unsupported by evidence, and warn that the movement’s ties to top AI firms suggest an effort to neutralize corporate competitors or distract regulators from existing AI harms.

“A lot of people are like, ‘How is RAND still able to make inroads as they take Open [Philanthropy] money, and get [U.S. government] money now to do this?’” said the AI policy professional, who was granted anonymity due to the topic’s sensitivity.

In the letter, the House lawmakers warned NIST that “scientific merit and transparency must remain a paramount consideration,” and that they expect the agency to “hold the recipients of federal research funding for AI safety research to the same rigorous guidelines of scientific and methodological quality that characterize the broader federal research enterprise.”